The #1 DeepSeek China AI Mistake, Plus 7 Extra Lessons

Posted by Rosie on 25-02-13 10:23

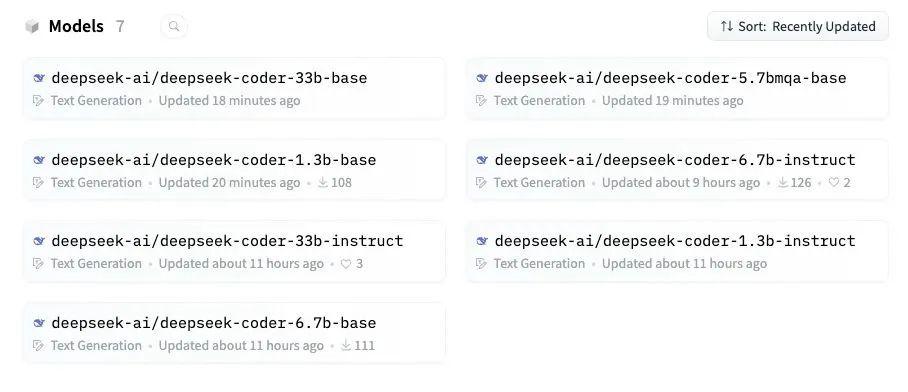

To download from the main branch, enter TheBloke/deepseek-coder-6.7B-instruct-GPTQ in the "Download model" box. Under Download custom model or LoRA, enter TheBloke/deepseek-coder-6.7B-instruct-GPTQ. If you want any custom settings, set them and then click Save settings for this model followed by Reload the Model in the top right. The downside, and the reason why I don't list that as the default option, is that the files are then hidden away in a cache folder and it is harder to know where your disk space is being used, and to clear it up if/when you want to remove a downloaded model. For extended sequence models - e.g. 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Before Tim Cook commented today, OpenAI CEO Sam Altman, Meta's Mark Zuckerberg, and many others had commented, which you can read earlier in this live blog. On AIME 2024, it scores 79.8%, slightly above OpenAI o1-1217's 79.2%. This evaluates advanced multistep mathematical reasoning. In May 2024, DeepSeek released the DeepSeek-V2 series. This may not be a complete list; if you know of others, please let me know! K), a lower sequence length may have to be used.
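As a rough sketch of the same download done from Python rather than the web UI: the snippet below prepares arguments for `snapshot_download` from the `huggingface_hub` package and points `local_dir` at a visible folder, so the files do not end up hidden in a cache. The helper names and the `models` folder are assumptions made for this example, not part of any tool mentioned above.

```python
from pathlib import Path


def build_download_kwargs(repo_id: str, revision: str = "main",
                          models_dir: str = "models") -> dict:
    """Build keyword arguments for huggingface_hub.snapshot_download.

    models_dir is a hypothetical folder name chosen for this sketch.
    """
    # Use a visible per-model folder instead of the hidden cache directory.
    local_dir = Path(models_dir) / repo_id.replace("/", "_")
    return {"repo_id": repo_id, "revision": revision, "local_dir": str(local_dir)}


def download_model(repo_id: str, revision: str = "main") -> str:
    """Download one branch of a model repo (needs network access)."""
    # Imported lazily so the helper above works without the package installed.
    from huggingface_hub import snapshot_download
    return snapshot_download(**build_download_kwargs(repo_id, revision))


kwargs = build_download_kwargs("TheBloke/deepseek-coder-6.7B-instruct-GPTQ")
print(kwargs["revision"], kwargs["local_dir"])
```

Calling `download_model("TheBloke/deepseek-coder-6.7B-instruct-GPTQ")` would then fetch the main branch; passing a different `revision` selects another branch.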

Sequence Length: The length of the dataset sequences used for quantisation. Ideally this is the same as the model sequence length. Note that a lower sequence length does not limit the sequence length of the quantised model. It only affects the quantisation accuracy on longer inference sequences. For Act Order, True results in better quantisation accuracy. For Damp %, 0.01 is the default, but 0.1 results in slightly better accuracy. For Group Size, higher numbers use less VRAM, but have lower quantisation accuracy. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now. The model will automatically load, and is now ready for use! It is strongly recommended to use the text-generation-webui one-click-installers unless you are sure you know how to make a manual install. It's recommended to use TGI version 1.1.0 or later. You can use GGUF models from Python using the llama-cpp-python or ctransformers libraries. Gemini 2.0 Advanced came up with: "You're a seasoned B2B email marketing expert; generate a list of key facts and best practices, and explain how you use each point." Examples of key performance measures can guide this process.
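To make the group size trade-off concrete, here is a minimal sketch of plain round-to-nearest quantisation with one scale and offset per group. This is not the GPTQ algorithm itself, only an illustration of why larger groups store less metadata (fewer scales) but reconstruct the weights less accurately. The function names and the random toy weights are assumptions for this example.

```python
import random


def quantise_groupwise(weights, bits=4, group_size=32):
    """Round-to-nearest quantisation with one scale and offset per group.

    Returns the dequantised weights and the number of groups. Each group
    stores its own scale and offset, which is the memory overhead.
    """
    levels = 2 ** bits - 1
    dequantised = []
    n_groups = 0
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for a flat group
        for w in group:
            q = round((w - lo) / scale)         # integer code in [0, levels]
            dequantised.append(lo + q * scale)  # value seen at inference time
        n_groups += 1
    return dequantised, n_groups


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1024)]

for gs in (32, 128, 1024):
    approx, n_groups = quantise_groupwise(weights, bits=4, group_size=gs)
    print(gs, n_groups, mean_squared_error(weights, approx))
```

On these toy weights the error should grow as the group size increases, while the number of stored scales shrinks.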

In the software world, open source means that the code can be used, modified, and distributed by anyone. Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options provided, their parameters, and the software used to create them. Multiple quantisation parameters are provided, to allow you to choose the best one for your hardware and requirements. These files were quantised using hardware kindly provided by Massed Compute. See Provided Files above for the list of branches for each option. See below for instructions on fetching from different branches. Reports by state-sponsored Russian media on potential military uses of AI increased in mid-2017. The report estimated that Chinese military spending on AI exceeded $1.6 billion each year. Caveats - spending compute to think: Perhaps the only important caveat here is understanding that one reason why O3 is so much better is that it costs more money to run at inference time - the ability to utilize test-time compute means on some problems you can turn compute into a better answer - e.g., the highest-scoring version of O3 used 170X more compute than the low-scoring version. Please make sure you're using the latest version of text-generation-webui. This resulted in the released version of Chat.
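One simple way to see how extra inference-time compute can turn into a better answer is majority voting over repeated samples. The sketch below is only an illustration of that general idea; it says nothing about how O3 actually works, and the toy `noisy_solver` is a hypothetical stand-in for a model that is right 40% of the time.

```python
import random
from collections import Counter


def noisy_solver(rng, correct=42, p_correct=0.4):
    """Toy stand-in for one sampled model answer (hypothetical, not a real model)."""
    if rng.random() < p_correct:
        return correct
    return rng.randint(0, 9)  # wrong answers are spread over many values


def majority_vote(rng, n_samples):
    """Sample n_samples answers and return the most common one."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples, trials=500, seed=0):
    """Fraction of trials in which the voted answer is the correct one."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == 42 for _ in range(trials))
    return hits / trials


print(accuracy(1), accuracy(25))
```

With 25 samples per question the voted answer is correct far more often than a single sample, at 25 times the compute.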

Chinese startup DeepSeek has built and released DeepSeek-V2, a surprisingly powerful language model. Its later DeepSeek-V3 large language model uses a mixture-of-experts architecture with 671B parameters, of which only 37B are activated for each token. Almost all models had trouble dealing with this Java-specific language feature. The majority tried to initialize with new Knapsack.Item(). A Mixture of Experts (MoE) is a way to make AI models smarter and more efficient by dividing tasks among multiple specialized "experts." Instead of using one big model to handle everything, MoE trains several smaller models (the experts), each specializing in specific types of data or tasks. I've worked with numerous Python libraries, like numpy, pandas, seaborn, matplotlib, scikit, imblearn, linear regression and many more. After more than a year of fierce competition, they entered a phase of consolidation. A search for 'what happened on June 4, 1989 in Beijing' on major Chinese online search platform Baidu turns up articles noting that June 4 is the 155th day in the Gregorian calendar or a link to a state media article noting authorities that year "quelled counter-revolutionary riots" - with no mention of Tiananmen. But even the state laws with civil liability have many of the same problems.
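The Mixture of Experts idea described above can be sketched in a few lines: a small router scores every expert, only the top-scoring few are run, and their outputs are mixed by softmax weights. This is a toy illustration under assumed names (`moe_forward`, the four lambda experts, the hand-picked gate vectors), not DeepSeek's actual architecture.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k experts and mix their outputs.

    gate_weights: one weight vector per expert (hypothetical toy router).
    experts: list of callables, each mapping a vector to a number.
    """
    # Router score per expert: dot product of the input with its gate vector.
    scores = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    # Keep only the top_k experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    probs = softmax([scores[i] for i in chosen])
    output = sum(p * experts[i](x) for p, i in zip(probs, chosen))
    return output, chosen


experts = [
    lambda x: sum(x),        # toy expert 0
    lambda x: max(x),        # toy expert 1
    lambda x: min(x),        # toy expert 2
    lambda x: x[0] - x[1],   # toy expert 3
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print(chosen)  # → [0, 1]
```

Only two of the four experts run for this input, which is the source of the efficiency gain: total parameters can be large while the work per input stays small.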

